...

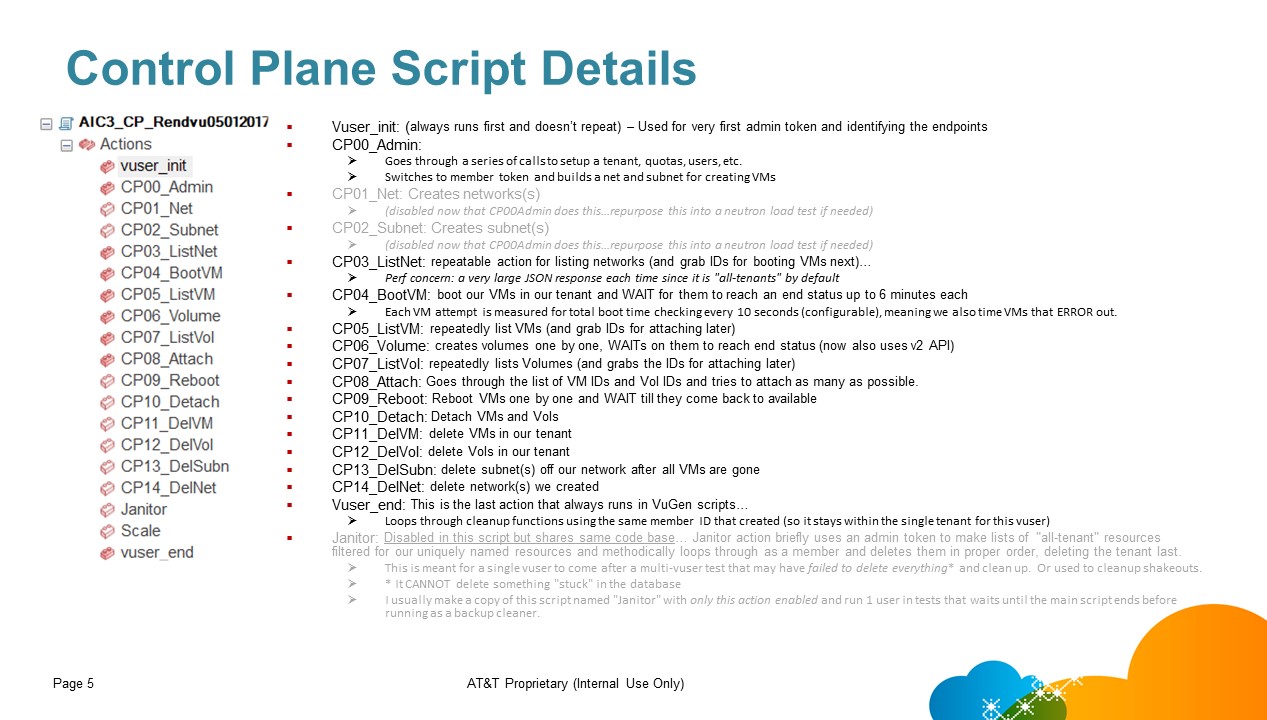

DelSubn: Delete subnet

DelNet: Delete network

There are also some heat templates used. But this is pretty much what happens for most things.

...

- Orchestration

- Ansible – create rally, shaker, os-faults and metrics gathering plugins

- Test Manager

- Resiliency Studio/Jenkins (AT&T Proposed – time to open source is Aug – Sept 2018)

- Start orchestrator runs

- Collect metrics

- Incorporate capability for SLA plugins

- SLA plugins will decide whether test is a success/failure

- Interact with GitHub/CI-CD environments

- Provide detailed logs and metrics for data

- Create bugs

- Orchestration

- Developer Tools

- Goal:

- Push what can be possible as far left into the development cycle

- Minimize resource utilization (financial & computational)

- Data Center Emulator

- Emulate reference architectures

- Run performance and failure injection scenarios

- Mathematically extrapolate for acceptable limits

- Goal:

- Define test scope & scenarios

- KPI/SLA

- What metrics are part of the KPI matrix

- Examples: Control Plane – API response time, Success Rate, RabbitMQ connection distribution, CPU/Memory utilization, I/O rate, etc.

- Examples: Data Plane – throughput, vrouter failure characteristics, storage failure characteristics, memory congestion, scheduling latency, etc.

- What are the various bounds?

- Examples: Control Plane - RabbitMQ connection distribution should be uniform within a certain std. deviation, API response times are lognormal distributed and not acceptable past 90 percentile, etc.

- Realistic Workload Models for control & data plane

- Realistic KPI models from operators

- Realistic outage scenarios

- What metrics are part of the KPI matrix

- Automated Test Case Generation

- Are there design & deployment templates that can be supplied so that an initial suite of scenarios is automatically generated?

- Top-Down assessment methodology to generate the scenarios – shouldn't burden developers with "paperwork".

- Performance

- Control Plane

- Data Plane

- Destructive

- Failure injection

- Scale

- Scale resources (cinder volumes, subnets, VMs, etc.)

- Concurrency

- Multiple requests on the same resource

- Operational Readiness

- What are we looking for here – just a shakeout to ensure a site is ready for operation? May be a subset of performance & resiliency tests?

- KPI/SLA

- Define reference architectures

- What are the reference architectures?

- H/W variety – where is it located?

- Deployment toolset for creating repeatable experiments – there is ENoS for container based deployments, what about other types?

- Deployment, Monitoring & Alerting templates

- Implementation Priorities

- Tackle Control Plane & Software Faults (rally + os-faults)

- Most code already there – need more plugins

- os-faults: More fault injection scenarios (degradations, core dumps, etc.)

- Rally: Randomized triggers, SLA extensions (e.g. t-test with p-values)

- Metrics gathering plugin

- Shaker enhancements (rally + shaker + os-faults)

- os-faults hooks mechanisms

- Storage, CPU/Memory (also cases with sr-iov, dpdk, etc.)

- os-faults for data plane software failures (cinder driver, vrouter, kernel stalls, etc.)

- Develop SLA measurement hooks

- os-faults underlay enhancements & data center emulator

- os-faults: Underlay crash & degradation code

- Data center emulator with ENoS to model underlay & software

- Traffic models & KPI measurement

- Realistic traffic models (CP + DP) and software to emulate the models

- Real KPI and scaled KPI to measure in virtual environments

- Tackle Control Plane & Software Faults (rally + os-faults)

...